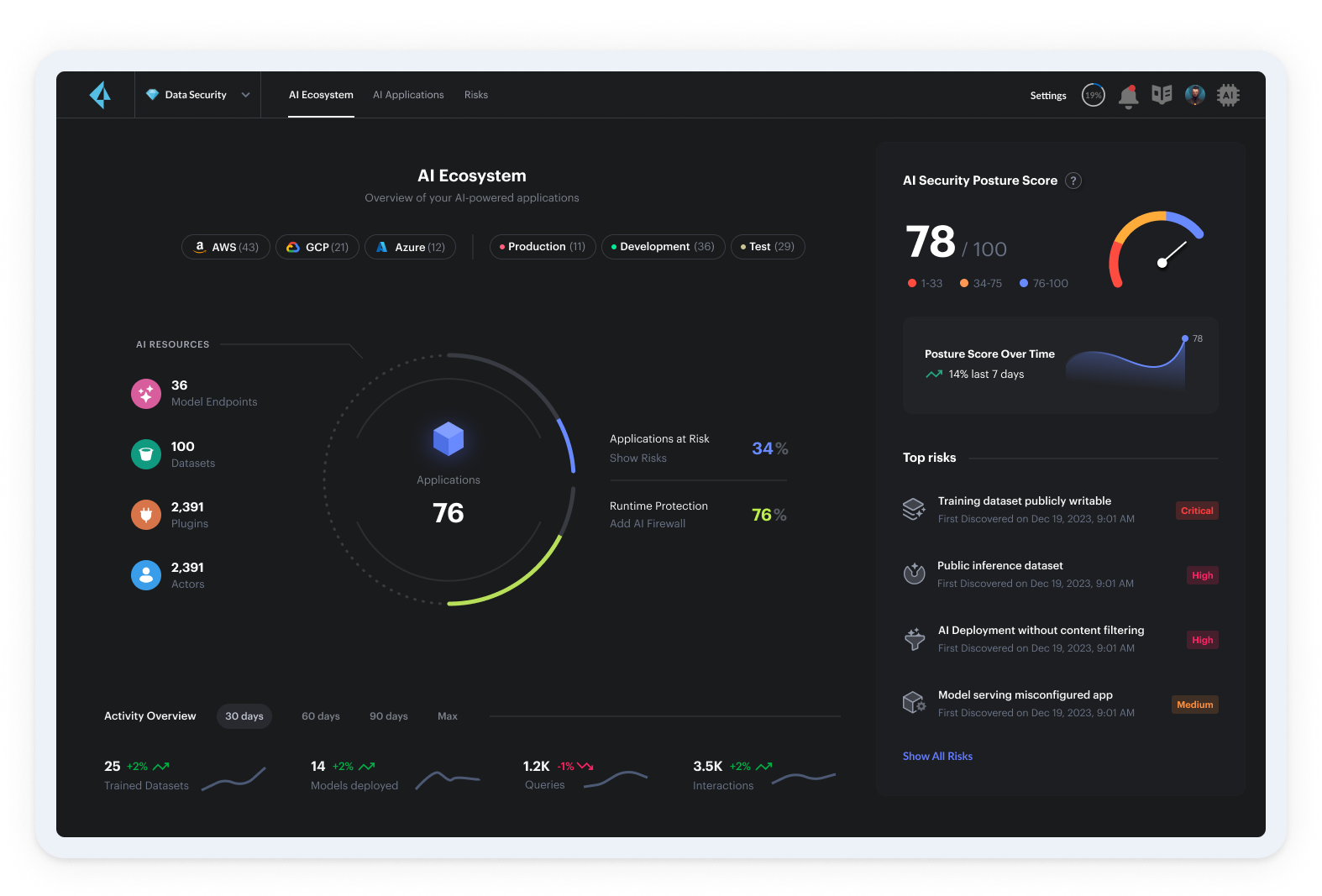

As artificial intelligence (AI) becomes ubiquitous, it introduces security challenges that existing tools were not designed to address, such as model risk, data exposure and potential misuse within AI environments. Today we announce the general availability of Prisma® Cloud AI security posture management (AI-SPM), a new set of capabilities that helps organizations deploy AI and GenAI at scale while reducing security and compliance risks.

Addressing AI Risk with AI-SPM

AI-SPM is an emerging category of tools designed to help organizations protect against the unique risks associated with AI, ML and GenAI models, including data exposure, misuse and model vulnerabilities. AI-SPM takes elements from existing security posture management approaches like data security posture management (DSPM), cloud security posture management (CSPM), and cloud infrastructure entitlement management (CIEM), and adapts them to address the specific challenges of AI systems in production environments. At Palo Alto Networks®, we see this as an extension and an integrated part of our Code to Cloud™ approach.

AI-SPM tools provide visibility into the AI model lifecycle, from data ingestion and training to deployment. By analyzing model behavior, data flows and system interactions, AI-SPM helps identify potential security and compliance risks that may not be apparent through traditional risk analysis and detection tools. Organizations can use these insights to enforce policies and best practices, ensuring that AI systems are deployed in a secure and compliant manner.

Additionally, AI-SPM can monitor for AI-specific threats like data poisoning, model theft and improper output handling, alerting security teams to potential incidents and providing guidance on remediation steps. As regulations around AI continue to evolve, AI-SPM can also help organizations stay ahead of compliance requirements by embedding privacy and acceptable use considerations into the AI development process.

A key backbone for AI-SPM is Precision AI, Palo Alto Networks' proprietary AI system. Precision AI uses rich data and security-specific models to automate detection, prevention and remediation, helping security teams trust AI-driven outcomes and get reliable results in today's complex threat landscape.

Key Capabilities of AI-SPM

AI security, like the broader AI ecosystem, is still evolving. The lines between AI-SPM, data loss prevention (DLP) and data security aren’t always clear. But we can define several essential capabilities of an AI-SPM solution.

1. AI Model Discovery and Inventory

Why it's needed: Different teams in an organization may deploy managed, semi-managed and unmanaged models. This creates model sprawl, unauthorized model usage, lack of governance, and shadow AI risks. Security teams need oversight of the ways in which AI is deployed to effectively monitor usage and prevent downstream risk.

Capability: AI-SPM tools enable the discovery of deployed models across the organization, visualization of associated resources (including compute, data, applications), and end-to-end visibility into the AI pipeline. This can help identify potential risks, enforce governance policies, and ensure that only authorized models are deployed in production environments.

Sample scenario: As part of an internal test, an open-source LLM without content guardrails is deployed on a virtual machine. Over time, usage of this model becomes commonplace, including for sensitive use cases. The AI-SPM tool can detect that this model is running and which principals are accessing it.
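To make the discovery step concrete, here is a minimal Python sketch, assuming discovery has already produced a list of model deployment records. The `ModelDeployment` record, its fields and the `find_shadow_models` helper are hypothetical illustrations, not part of the Prisma Cloud product or API. The sketch compares discovered models against an approved list and reports unapproved ones together with the principals calling them, mirroring the scenario above.

```python
from dataclasses import dataclass, field


@dataclass
class ModelDeployment:
    """Hypothetical inventory record for a model found during discovery."""
    name: str
    host: str          # e.g. a VM or managed endpoint identifier
    managed: bool      # True if served by a managed AI service
    guardrails: bool   # True if content guardrails are configured
    principals: set = field(default_factory=set)  # identities seen calling the model


def find_shadow_models(deployments, approved_names):
    """Return deployments not on the approved list, with the principals using them."""
    findings = []
    for d in deployments:
        if d.name not in approved_names:
            findings.append({
                "model": d.name,
                "host": d.host,
                "unmanaged": not d.managed,
                "no_guardrails": not d.guardrails,
                "principals": sorted(d.principals),
            })
    return findings


# Hypothetical inventory: one approved managed model, one ad hoc open-source LLM on a VM.
inventory = [
    ModelDeployment("approved-chat", "managed-endpoint-1", True, True, {"app-frontend"}),
    ModelDeployment("oss-llm-test", "vm-research-07", False, False, {"alice", "billing-service"}),
]

for finding in find_shadow_models(inventory, approved_names={"approved-chat"}):
    print(finding)
```

In practice, the inventory would be built from cloud provider APIs and identity or network logs rather than a hard-coded list, and an allowlist comparison is only one simple governance check among many.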

2. Data Exposure Prevention

Why it's needed: AI models, particularly LLMs, are trained on vast amounts of data that may contain sensitive information, personally identifiable information (PII), or other regulated content. Such content can end up in training data inadvertently, and training data can also be deliberately manipulated through data poisoning attacks that introduce biases or vulnerabilities into the model. While using third-party models supposedly “outsources” this risk to the model provider, the risk can re-emerge if the organization’s own data is used for fine-tuning or inference.

Once sensitive data becomes part of a model, it is very hard to detect, because models are largely black boxes, and it can potentially be exposed through regular model responses or prompt injection attacks.

Capability: AI-SPM tools provide data discovery and classification capabilities specifically designed for AI inference and training data. These tools can identify exposed data resources, such as unsecured storage buckets or databases, and alert security teams to potential data leaks or unauthorized access. AI-SPM can also monitor data flows to ensure that sensitive information is not being inadvertently ingested into AI models or exposed through model outputs.

Sample scenario: A researcher is using a cloud storage bucket to store training data for a new AI model. AI-SPM discovers that the bucket contains customer PII. The security team is alerted and can work with the researcher to anonymize the data and ensure compliance with data protection regulations.
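The classification step in this scenario can be illustrated with a simple pattern-based scanner. This is a minimal sketch under stated assumptions: the bucket contents are passed in as a plain dictionary mapping object keys to text, and the two regular expressions are illustrative only. Real data classification uses far more robust detection, and `scan_bucket` and `PII_PATTERNS` are hypothetical names, not a Prisma Cloud API.

```python
import re

# Illustrative patterns only; production classifiers use much more robust detection.
PII_PATTERNS = {
    "email": re.compile(r"\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def classify_object(text):
    """Return the set of PII types whose pattern appears in one object's text."""
    return {label for label, pattern in PII_PATTERNS.items() if pattern.search(text)}


def scan_bucket(objects):
    """Map object key -> sorted PII types found, given a {key: text} bucket listing."""
    findings = {}
    for key, text in objects.items():
        labels = classify_object(text)
        if labels:
            findings[key] = sorted(labels)
    return findings


# Hypothetical training-data objects in a research bucket.
training_data = {
    "train/part-0001.jsonl": '{"prompt": "reset my password", "user": "jane.doe@example.com"}',
    "train/part-0002.jsonl": '{"prompt": "summarize the Q3 report"}',
    "train/part-0003.jsonl": '{"note": "customer SSN 123-45-6789 on file"}',
}

for key, labels in scan_bucket(training_data).items():
    print(key, labels)
```

A finding like this is what would trigger the alert in the scenario; the follow-up (anonymizing the data) remains a human decision between the security team and the researcher.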

3. Posture and Risk Analysis

Why it's needed: AI systems are complex and often involve multiple components, such as data pipelines, training environments and deployment infrastructure. Misconfigurations or weak access controls in any of these components can introduce significant security risks. AI models may also be vulnerable to prompt injection, model extraction or denial-of-service attacks if not properly secured. Finally, compliance with evolving regulations requires ongoing monitoring and assessment of AI systems.

Capability: AI-SPM tools analyze the configuration and access control of AI systems to identify potential risks and vulnerabilities. This includes assessing the security posture of datasets, AI deployments and cloud resources in the app ecosystem. AI-SPM can also integrate with DSPM tools to enrich the analysis with contextual data about sensitive information flows, which enables effective prioritization of detected vulnerabilities.

Sample scenario: An AI development team is managing multiple customer-facing and internal models. Due to a misconfiguration at the application level, an internal chatbot that has access to protected intellectual property is exposed to the public. AI-SPM detects that the model's API endpoints are publicly accessible without authentication and that the model is using protected data. The relevant development team is alerted and can implement least-privilege access controls and authentication mechanisms.
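Posture analysis becomes more useful when individual findings are combined, as in the chatbot scenario, where public exposure, missing authentication and access to protected data together make one issue critical. The sketch below is a hypothetical severity rule, assuming each endpoint has already been assessed for exposure, authentication and the sensitivity labels of data it can reach (for example, from a DSPM scan). The `Endpoint` record, the `SENSITIVE` label set and the severity levels are illustrative assumptions, not how Prisma Cloud scores risk.

```python
from dataclasses import dataclass


@dataclass
class Endpoint:
    """Hypothetical posture record for a model-serving endpoint."""
    model: str
    public: bool            # reachable from the internet
    auth_required: bool     # requests must be authenticated
    data_labels: frozenset  # sensitivity labels of data the model can reach


SENSITIVE = {"pii", "ip", "phi"}  # assumed label names
SEVERITY_ORDER = ["critical", "high", "medium", "low"]


def assess(ep):
    """Return a severity string for one endpoint, escalating when risks combine."""
    exposed = ep.public and not ep.auth_required
    sensitive = bool(ep.data_labels & SENSITIVE)
    if exposed and sensitive:
        return "critical"  # public, unauthenticated and touching sensitive data
    if exposed:
        return "high"      # public and unauthenticated, no sensitive data detected
    if ep.public and sensitive:
        return "medium"    # authenticated, but sensitive data is internet-facing
    return "low"


endpoints = [
    Endpoint("internal-chatbot", public=True, auth_required=False, data_labels=frozenset({"ip"})),
    Endpoint("support-bot", public=True, auth_required=True, data_labels=frozenset({"pii"})),
    Endpoint("demo-bot", public=False, auth_required=True, data_labels=frozenset()),
]

# Print endpoints from most to least severe so the worst issue is handled first.
for ep in sorted(endpoints, key=lambda e: SEVERITY_ORDER.index(assess(e))):
    print(ep.model, assess(ep))
```

Sorting by severity puts the publicly exposed internal chatbot first, which is the kind of prioritization the DSPM integration described above is meant to enable.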

Get Started with Prisma Cloud AI-SPM

Securing AI in production is a new challenge, and it comes at a time when security teams are overstretched and struggling to tackle a rapidly changing threat landscape. Few security leaders, though, have the desire or ability to impede adoption of AI and the value it unlocks for organizations.

Prisma® Cloud AI-SPM provides the visibility and control organizations need as they adopt new AI models and tooling. Our Code to Cloud platform allows for a tightly integrated solution that builds on Prisma Cloud’s existing DSPM and CNAPP capabilities, streamlining security efforts, reducing tool bloat and enabling quick time to value.

Learn More

Enhance your organization's security and stay ahead of emerging threats by downloading our in-depth whitepaper on AI governance, designed specifically for security professionals seeking to implement effective AI strategies. And don't forget to request a demo to see first-hand why you need AI-SPM.