Artificial intelligence (AI) is advancing rapidly, with organizations across myriad industries deploying AI-powered applications at an unprecedented pace. In The State of Cloud-Native Security Report 2024, for example, 100% of survey respondents said they’re embracing AI-assisted application development, an astonishing result for a technology many considered science fiction just a few years ago. But while AI systems offer significant benefits, they also introduce novel security risks and governance challenges that traditional cybersecurity approaches are ill-equipped to handle.

Current AI Landscape and Its Security Implications

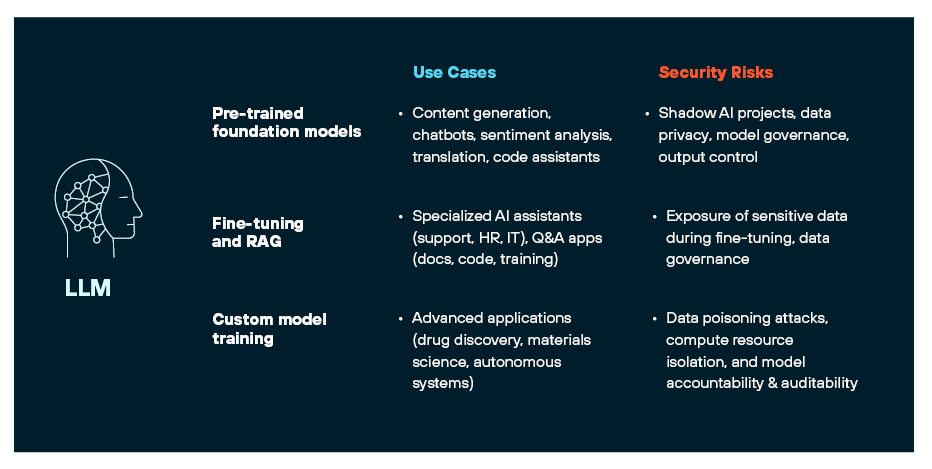

How Security Leaders Should Conceptualize AI Risk

Cybersecurity teams are used to playing catch-up. More often than not, they have to adapt their strategies and controls to keep pace with the adoption of new technologies — e.g., cloud computing, containerization and serverless architectures. The rise of AI, however, presents new obstacles for cybersecurity, many of which are qualitatively different from those in the past.

Change Is Faster and More Extreme

AI is causing a seismic shift in organizations, and it’s happening far faster than previous transformations.

- New models, techniques and applications are emerging at breakneck speed, with major breakthroughs occurring monthly or even weekly.

- Organizations are feeling immense pressure to quickly adopt and integrate these technologies to stay competitive and drive innovation.

- The availability of many AI capabilities through simple APIs, along with the rapid emergence of a supporting ecosystem of tools and frameworks, has further accelerated adoption by removing roadblocks typically caused by skill shortages.

This combination of rapid technological change and urgent business demand can pose unique security challenges. Compressed timelines make it difficult to assess risks thoroughly and to implement appropriate controls before AI systems are deployed. Security often becomes an afterthought in the rush to market. Best practices and regulatory guidance also struggle to keep up, which means security teams must adapt on the fly and make judgments without the benefit of industry consensus or clear standards.

These conditions, however, present an opportunity to secure AI by design. By embedding security and governance considerations into the AI development process from the outset, organizations can proactively mitigate risks and build more resilient AI systems. This requires close collaboration among security, legal and AI development teams to align with best practices and integrate necessary controls into the AI lifecycle.

Broader Implications

The potential impact of AI systems is far-reaching and not always well understood. AI models can automate high-stakes decisions, generate content with legal implications (e.g., use of copyright-protected material), and access vast amounts of sensitive data. The risks associated with AI, such as biased outcomes, privacy violations, intellectual property exposure and malicious use, require a fundamentally different risk management paradigm.

To address these broader implications, cybersecurity leaders must engage with stakeholders across legal, ethical and business functions. Collaborative governance structures need to be established to define the organization's risk tolerance, develop policies and guidelines, and implement ongoing monitoring and auditing processes. Cybersecurity teams will have to work closely with data science and engineering teams to embed security and risk management into the AI development lifecycle.

New Types of Security and Compliance Oversight Are Required

Traditional cybersecurity frameworks are often focused on protecting data confidentiality, integrity and availability. With AI, however, additional dimensions, such as fairness, transparency and accountability, come into play.

Emerging regulatory frameworks like the EU AI Act are placing new demands on organizations regarding oversight and governance mechanisms for AI systems. These regulations require companies to assess and mitigate the risks associated with AI applications, particularly in high-risk domains such as hiring, credit scoring and law enforcement. Compliance obligations may vary based on the specific use case and the level of risk involved. For instance, AI systems used for hiring decisions are likely to be subject to more stringent auditing and transparency requirements to ensure they’re not perpetuating biases or discrimination.
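To illustrate how obligations can scale with the use case, here is a minimal Python sketch of an internal compliance check. It assumes a simplified, hypothetical tiering scheme loosely modeled on the EU AI Act's risk categories: the three domains named above are treated as high risk, while the chatbot entry, the default tier and the control names (`risk_assessment`, `bias_audit` and so on) are illustrative placeholders rather than requirements taken from the Act.

```python
from enum import Enum


class RiskTier(Enum):
    """Simplified tiers loosely modeled on the EU AI Act's risk categories."""
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Hypothetical mapping from internal use-case labels to risk tiers. The three
# domains named in the text are treated as high risk; the chatbot entry and the
# MINIMAL default are placeholders, not legal classifications.
USE_CASE_TIERS = {
    "hiring": RiskTier.HIGH,
    "credit_scoring": RiskTier.HIGH,
    "law_enforcement": RiskTier.HIGH,
    "customer_chatbot": RiskTier.LIMITED,
}

# Hypothetical internal controls an organization might require per tier.
REQUIRED_CONTROLS = {
    RiskTier.HIGH: {"risk_assessment", "bias_audit", "human_oversight", "decision_logging"},
    RiskTier.LIMITED: {"transparency_notice"},
    RiskTier.MINIMAL: set(),
}


def missing_controls(use_case: str, implemented: set[str]) -> set[str]:
    """Return the required controls that have not yet been implemented."""
    tier = USE_CASE_TIERS.get(use_case, RiskTier.MINIMAL)
    if tier is RiskTier.UNACCEPTABLE:
        # Prohibited uses cannot be made compliant by adding controls.
        raise ValueError(f"use case '{use_case}' is prohibited")
    return REQUIRED_CONTROLS[tier] - implemented
```

For example, `missing_controls("hiring", {"risk_assessment"})` returns the three remaining high-tier controls, while an unmapped use case falls back to the placeholder minimal tier and returns an empty set. Any real mapping would need to be defined together with legal and compliance teams.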

Security teams need to go beyond their traditional focus on access controls and data protection. They must work with legal and compliance teams to establish mechanisms for monitoring and validating the outputs and decisions made by AI models. This may involve implementing explainable AI techniques, conducting regular bias audits or maintaining detailed documentation of model inputs, outputs and decision logic to support compliance reporting and investigations.
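A bias audit can start with something as simple as comparing selection rates across groups. The sketch below applies the four-fifths (80%) rule of thumb from US employment-selection guidance, which is only one of many fairness metrics; the `(group, selected)` input format and the function names are assumptions for illustration.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Compute the fraction of positive outcomes per group.

    decisions: iterable of (group, selected) pairs, where selected is a bool.
    """
    totals = defaultdict(int)
    chosen = defaultdict(int)
    for group, selected in decisions:
        totals[group] += 1
        if selected:
            chosen[group] += 1
    return {group: chosen[group] / totals[group] for group in totals}


def adverse_impact(decisions, threshold=0.8):
    """Return {group: impact_ratio} for groups below threshold x the top rate."""
    rates = selection_rates(decisions)
    top = max(rates.values())
    if top == 0:
        return {}  # nobody was selected, so impact ratios are undefined
    return {g: r / top for g, r in rates.items() if r / top < threshold}
```

With 25 of 50 applicants selected from group A and 15 of 50 from group B, group B's impact ratio is 0.3 / 0.5 = 0.6, which falls below 0.8 and would be flagged for review. A flag is a prompt for investigation, not proof of discrimination.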

Novel Challenges in Threat Detection and Remediation

The technical challenges of securing AI systems are novel and complex, and they affect both detection and remediation.

New Threat Categories Require New Detection Approaches

As mentioned previously, security teams need to monitor not only the underlying data and model artifacts but also the actual outputs and behaviors of the AI system in production. This requires analyzing vast troves of unstructured data and detecting novel threats, such as data poisoning attacks, model evasion techniques and hidden biases, that could skew the AI's outputs in harmful ways. Existing security tools are often ill-equipped to handle these AI-specific threats.
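One common way to watch model behavior in production is to compare the distribution of current output scores against a baseline. The minimal sketch below computes the Population Stability Index (PSI) over binned scores; it assumes scores in [0, 1], and `psi` is a hypothetical helper name rather than a specific product's API. Drift alone does not prove poisoning or evasion, but it is a cheap signal that something feeding or driving the model has changed.

```python
import math


def psi(baseline, current, bins=10, eps=1e-6):
    """Population Stability Index between two samples of scores in [0, 1].

    Higher values mean the current score distribution has moved further from
    the baseline. eps keeps empty bins from producing log(0).
    """
    def proportions(values):
        counts = [0] * bins
        for v in values:
            # Clamp so a score of exactly 1.0 (or slightly out of range) lands in an edge bin.
            idx = min(max(int(v * bins), 0), bins - 1)
            counts[idx] += 1
        n = len(values)
        return [max(c / n, eps) for c in counts]

    expected = proportions(baseline)
    actual = proportions(current)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))
```

A frequently cited rule of thumb treats PSI above about 0.25 as a significant shift, though thresholds should be tuned to each model and its traffic.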

Remediation Is Far from Straightforward

Unlike traditional software vulnerabilities, which often can be patched with a few lines of code, issues in AI models may require retraining the entire model from scratch. AI models learn from the data they’re trained on, and that data becomes deeply embedded in the model's parameters. If the training data is found to contain sensitive information, biases or malicious examples, removing or correcting the specific data points is not easy. The model often must be completely retrained on a sanitized dataset, which can take weeks or months and may cost hundreds of thousands of dollars in compute resources and human effort.

Moreover, retraining a model is not a guaranteed solution, since it may degrade performance or introduce new issues. While there is ongoing research into machine unlearning, data removal and other techniques, these approaches are still nascent and not widely applicable. As a result, prevention and early detection of AI vulnerabilities are crucial, because reactive remediation can be prohibitively costly and time-consuming.
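To make the machine unlearning idea concrete, here is a toy sketch of SISA-style sharded training (Sharded, Isolated, Sliced, Aggregated; the slicing step is omitted here). Data is split into shards, each shard trains its own isolated sub-model, and predictions are aggregated, so forgetting a record means retraining only one shard. The "model" is just a label mean standing in for a real training run, and the class and method names are hypothetical.

```python
from statistics import mean


class ShardedEnsemble:
    """Toy SISA-style ensemble: one isolated sub-model per data shard.

    Each "sub-model" is just the mean of its shard's labels, standing in for a
    real training run. Forgetting a record retrains only the shard holding it.
    """

    def __init__(self, records, num_shards=4):
        # records: list of (record_id, label) pairs, dealt round-robin into shards.
        self.shards = [[] for _ in range(num_shards)]
        for i, record in enumerate(records):
            self.shards[i % num_shards].append(record)
        self.models = [self._train(shard) for shard in self.shards]
        self.retrain_count = 0

    @staticmethod
    def _train(shard):
        # Stand-in for training; an empty shard yields no model.
        return mean(label for _, label in shard) if shard else None

    def predict(self):
        # Aggregate by averaging the outputs of all trained sub-models.
        return mean(m for m in self.models if m is not None)

    def forget(self, record_id):
        """Remove a record and retrain only the affected shard; return its index."""
        for i, shard in enumerate(self.shards):
            kept = [r for r in shard if r[0] != record_id]
            if len(kept) != len(shard):
                self.shards[i] = kept
                self.models[i] = self._train(kept)
                self.retrain_count += 1
                return i
        raise KeyError(record_id)
```

With eight records split across four shards, `forget(4)` retrains only shard 0 and leaves the other three sub-models untouched. The trade-off is that each sub-model sees less data, which can reduce accuracy, one reason such techniques are not yet widely applicable.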

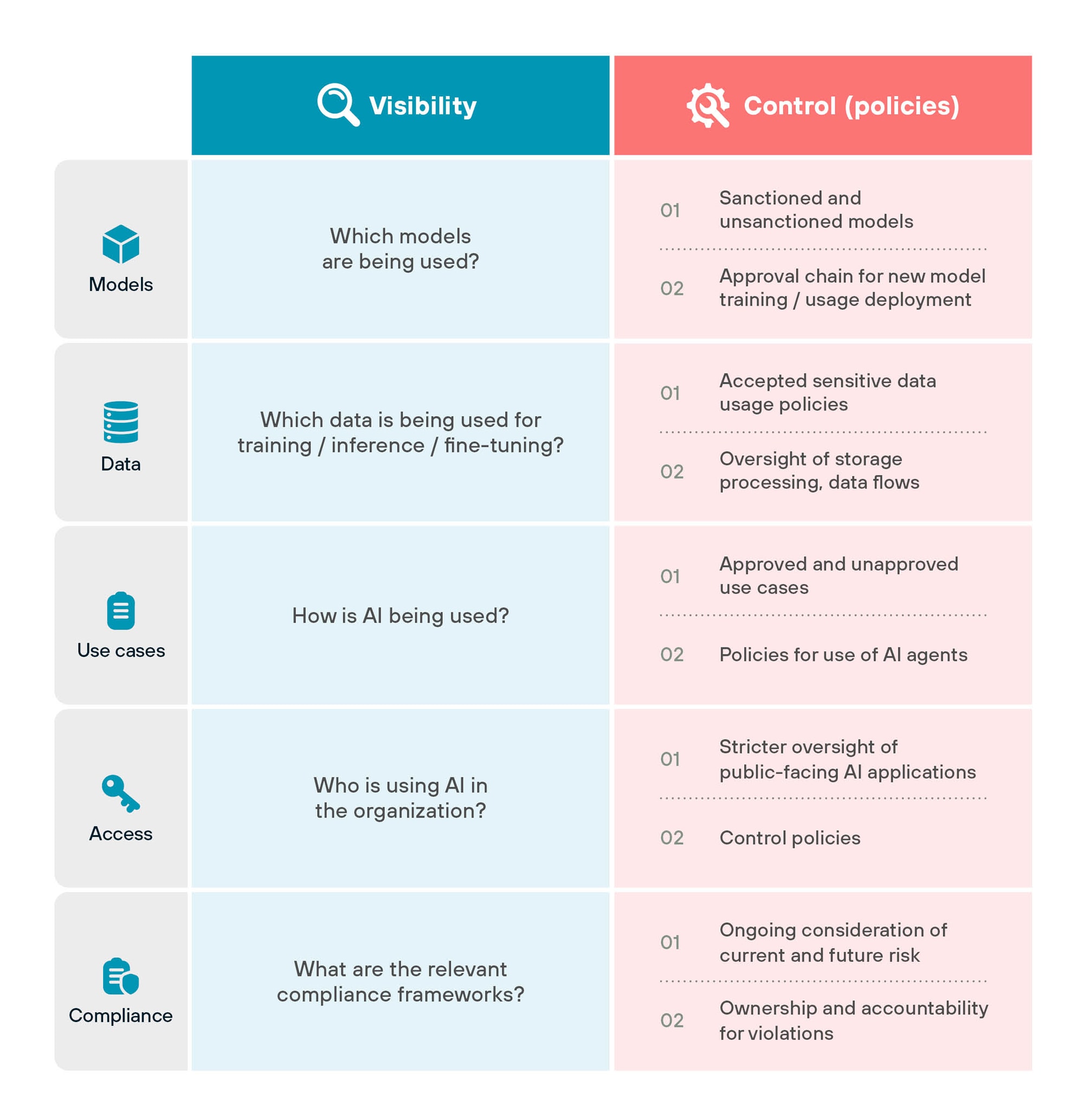

Suggested Governance Framework for AI-Powered Applications

To manage the risks and opportunities presented by AI-powered applications effectively, we advise organizations to adopt new governance frameworks that focus on two key aspects — visibility and control.

Visibility is about gaining a clear understanding of how AI is being used across the organization. This includes maintaining an inventory of all deployed AI models, tracking what data is being used to train and operate these models, and documenting the capabilities and access permissions of each model. Without this foundational visibility, it is impossible to assess risk or enforce policies.
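An AI inventory does not need to be elaborate to be useful. The sketch below models one inventory entry as a Python dataclass with the fields described above; the field names, example values and the two query helpers are hypothetical and would be adapted to an organization's own asset-management tooling.

```python
from dataclasses import dataclass, field


@dataclass
class AIModelRecord:
    """One entry in a hypothetical AI inventory (field names are illustrative)."""
    name: str
    owner: str
    training_data_sources: list[str] = field(default_factory=list)
    inference_data_sources: list[str] = field(default_factory=list)
    capabilities: list[str] = field(default_factory=list)        # e.g. "generate_text"
    access_permissions: list[str] = field(default_factory=list)  # e.g. "read:crm_db"


def find_models_using(inventory, data_source):
    """Names of models that train on or read from the given data source."""
    return [
        m.name
        for m in inventory
        if data_source in m.training_data_sources
        or data_source in m.inference_data_sources
    ]


def undocumented(inventory):
    """Names of models missing the data lineage or permissions needed for review."""
    return [m.name for m in inventory if not m.training_data_sources or not m.access_permissions]
```

Queries like `find_models_using(inventory, "crm_db")` answer the question a risk assessment usually starts with (which models touch this data?), and `undocumented(inventory)` surfaces entries that cannot yet be assessed.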

Control refers to the policies, processes and technical safeguards that need to be established to ensure that AI is used responsibly and in alignment with organizational values. This encompasses data governance policies dictating what information can be used for AI, access controls restricting who can develop and deploy models, and monitoring and auditing to validate model behavior and performance.
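Controls like these are often expressed as policy checks that run before a model is deployed. The minimal sketch below combines a data governance rule, a role-based access rule and an audit requirement; the classification labels, role name and `DeploymentRequest` fields are hypothetical placeholders, and in practice such rules might live in a dedicated policy engine rather than in application code.

```python
from dataclasses import dataclass

# Hypothetical data classifications that policy forbids using for AI training.
PROHIBITED_FOR_TRAINING = {"pci", "phi"}
# Hypothetical roles allowed to deploy models to production.
DEPLOY_ROLES = {"ml_platform_admin"}


@dataclass
class DeploymentRequest:
    requester_role: str
    dataset_classifications: set[str]
    bias_audit_passed: bool


def evaluate(request: DeploymentRequest) -> list[str]:
    """Return all policy violations; an empty list means the request may proceed."""
    violations = []
    if request.requester_role not in DEPLOY_ROLES:
        violations.append(f"role '{request.requester_role}' may not deploy models")
    banned = request.dataset_classifications & PROHIBITED_FOR_TRAINING
    if banned:
        violations.append(f"training data includes prohibited classes: {sorted(banned)}")
    if not request.bias_audit_passed:
        violations.append("bias audit has not passed")
    return violations
```

Returning every violation, rather than stopping at the first, gives the requester a complete list of what to fix.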

The goal is to provide a structured approach for security leaders to collaborate with stakeholders across the organization — including security, compliance and engineering teams — to design and implement appropriate governance mechanisms for AI. Specific implementation details and policies can vary according to the organization’s requirements, priorities and local regulations.

Learn More

Equip your organization with the knowledge and tools necessary to effectively secure your AI-powered applications. Download our whitepaper, Establishing AI Governance for AI-Powered Applications, and start building a resilient and compliant AI governance framework today.

And Did You Know?

AI-SPM will be generally available to all Prisma Cloud users on Aug. 6. If you haven’t tried Prisma Cloud, take it for a test drive with a free 30-day Prisma Cloud trial.