What Is Container Registry Security?

Container registry security focuses on protecting container registries, the centralized storage and distribution systems for container images. Container registries play a pivotal role in the container ecosystem, ensuring the integrity and security of containerized applications. Proper container registry security involves using trusted registries, implementing rigorous access control, monitoring for vulnerabilities, and securing the hosting server. Additionally, it requires denying insecure connections and removing stale images. By prioritizing container registry security, organizations can safeguard their containerized environments and maintain the trust of users and clients.

Container Registry Security Explained

Container registry security zeroes in on a critical component of the container ecosystem — the container registry. In the broader narrative of container security, the registry acts as the custodian of container images, the building blocks of containerized applications. As such, the container registry is more than a storage unit. It is, rather, a nexus point where the integrity of application images is both maintained and distributed.

Understanding Container Registries

In a containerized environment, applications and their dependencies are packaged into containers, making them portable and easy to deploy across platforms. Container registries support this process by providing a location where container images can be versioned, retrieved, and deployed consistently.

The container registry, then, is a centralized storage and distribution system for container images. The registry allows developers and operations teams to store, manage, and share container images, which they’ll use to create and deploy containerized applications and microservices.

Public and Private Registries

Organizations may use a combination of public and private container registries to manage their container images, as well as other artifacts.

Public registries, such as Docker Hub and GitHub Container Registry (part of GitHub Packages), offer a vast collection of open-source images that organizations can use as a foundation for their applications. These registries are generally accessible to anyone, making it easy for developers to find and use pre-built images.

But organizations often have specific requirements and proprietary software that necessitate the use of private registries.

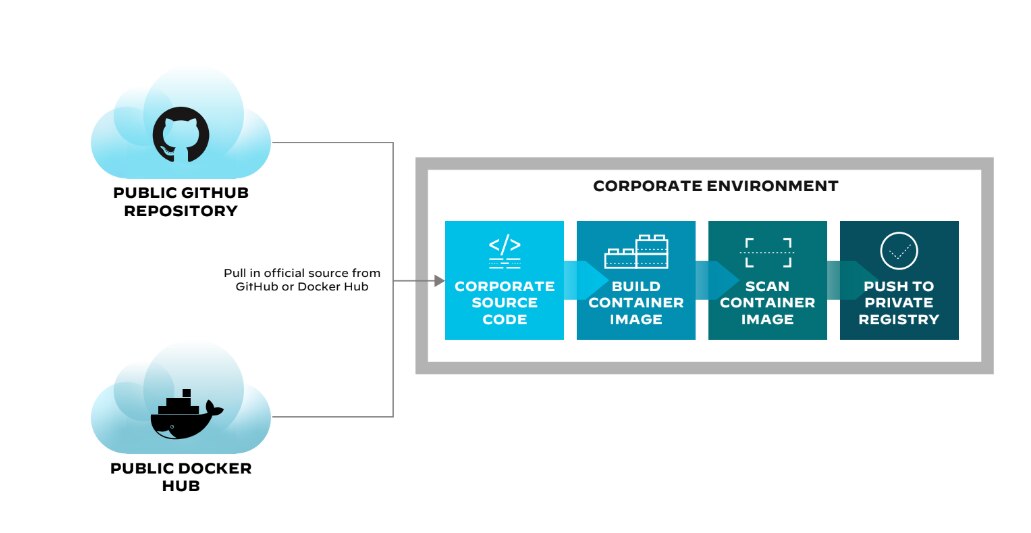

Figure 1: Container registry / repository public vs. private

Private container registries, such as Azure Container Registry, Amazon Elastic Container Registry, and Google Artifact Registry (the successor to Google Container Registry), provide secure, controlled environments for storing and managing proprietary images and related artifacts. These registries are accessible only to authorized users within the organization, which helps ensure that sensitive information and custom-built images remain secure.

By using a combination of public and private registries, organizations can leverage the benefits of open-source images while maintaining control over their proprietary software. This dual approach allows organizations to optimize their container management workflows and streamline the deployment process.

Components of Container Registry Security

Because the registry is central to how a containerized environment operates, and because an organization can easily store tens of thousands of images in it, securing the registry is integral to the integrity of the software development lifecycle.

Vulnerabilities can compromise more than the application. An attacker leveraging a misconfiguration could gain unauthorized access to the CI/CD system and move laterally to access the underlying OS. They could potentially manipulate legitimate CI/CD flows, obtain sensitive tokens, and move to the production environment where identifying an exposed credential might allow them to enter the organization’s network.

Registry security begins with using only trusted registries and libraries. Continuously monitoring images for newly disclosed vulnerabilities is foundational, along with securing the hosting server and implementing strict access policies. Proper registry security should deny insecure connections, flag or remove stale images, and enforce stringent authentication and authorization restrictions.

Let’s look at these measures in greater detail.

Promoting Image and Artifact Integrity in CI/CD

Understanding the risks associated with images and artifacts drives home the importance of implementing rigorous checks to ensure their integrity. Consider implementing the following strategies.

Deny Insecure Connections

Public registries may allow anonymous access to container images, but every connection to a registry should still be secure. Encrypted connections help prevent man-in-the-middle attacks, tampering, and unauthorized access to sensitive information.

To deny insecure connections, configure your systems to accept only TLS-encrypted connections, such as HTTPS. Start by obtaining and installing a valid TLS certificate from a trusted certificate authority (CA) for your domain. Then, update your server or service configuration to enforce HTTPS and disable insecure protocols like plain HTTP. Depending on your setup, this may involve adjusting settings in your web server (e.g., Nginx, Apache), load balancer, or application. Also, consider using security features like HSTS (HTTP Strict Transport Security) to instruct browsers to always use secure connections when accessing your site or service.
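
On the client side, a deployment script can apply the same rule by refusing plaintext registry endpoints before it connects. The following is a minimal sketch using only the Python standard library; the function names and the host `registry.example.com` are hypothetical and are not part of any registry's tooling.

```python
import ssl
from urllib.parse import urlparse


def require_https(registry_url: str) -> str:
    """Return the URL unchanged if it uses HTTPS; otherwise raise ValueError."""
    parsed = urlparse(registry_url)
    if parsed.scheme != "https" or not parsed.hostname:
        raise ValueError(f"insecure or malformed registry URL: {registry_url!r}")
    return registry_url


def strict_tls_context() -> ssl.SSLContext:
    """Build a client context that verifies certificates and rejects TLS < 1.2."""
    context = ssl.create_default_context()  # verifies the chain and the hostname
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


# Hypothetical usage: validate the endpoint before any request is made.
endpoint = require_https("https://registry.example.com/v2/")
tls = strict_tls_context()
```

The context returned by `strict_tls_context()` could then be passed to an HTTP client that accepts an `ssl.SSLContext`, so that certificate verification is never silently disabled.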

Remove Stale Images

Establish a policy that defines stale images (for example, images older than a specific time frame or unused for a certain period), and use registry tools or APIs to list and filter images based on that policy. With a self-hosted Docker registry, for example, you can use the registry's HTTP API to list repositories and tags and retrieve image metadata, then filter by age or tag. Other registries offer similar APIs or CLI tools. Once you've identified the stale images, use the appropriate commands or APIs to delete them, following the registry's guidance on garbage collection so that the underlying storage is actually reclaimed.
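
The filtering step of such a policy can be sketched as follows. This is an illustration only: the record keys `name` and `last_pushed` and the `keep` allowlist are assumptions, and fetching the metadata from a particular registry is left out because each registry exposes it differently.

```python
from datetime import datetime, timedelta, timezone


def find_stale_images(images, max_age_days, keep=frozenset(), now=None):
    """Return names of images last pushed more than max_age_days ago.

    images: list of dicts with hypothetical keys "name" (str) and
            "last_pushed" (timezone-aware datetime).
    keep:   names that must never be reported as stale.
    """
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=max_age_days)
    return [
        image["name"]
        for image in images
        if image["last_pushed"] < cutoff and image["name"] not in keep
    ]
```

Reviewing the returned list before deleting anything gives the team a chance to catch images that are old but still deployed.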

Avoid IAM Issues in Third-Party Registries

Identity and access management (IAM) is crucial for organizations, particularly in source control management (SCM) systems like GitHub, where repositories store valuable code and assets. Inadequate IAM can lead to security risks in the CI/CD pipeline. To strengthen security and governance for repositories, organizations can use single sign-on (SSO) and System for Cross-domain Identity Management (SCIM) to manage access controls. On GitHub, however, SSO is available only with GitHub Enterprise, which leaves organizations on other plans exposed to these risks.

To mitigate issues involving private email addresses in GitHub accounts, ghost GitHub accounts, and incomplete offboarding, enforce two-factor authentication (2FA), establish an onboarding protocol with dedicated corporate accounts, and maintain an inventory of user accounts. For SSO-enabled organizations, implementing SCIM automates user deprovisioning and eliminates access through stale credentials. Addressing IAM risks helps protect repositories and the CI/CD ecosystem and maintains a high level of security across all systems.
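
An account inventory is most useful when it is checked automatically. The sketch below compares a list of organization members against an employee inventory and flags the three issues named above. The record keys (`login`, `email`, `two_factor`) and the domain `example.com` are hypothetical; a real audit would populate these records from the SCM provider's API and the HR system.

```python
def audit_accounts(org_members, active_employees, corporate_domain):
    """Flag accounts that violate the onboarding and offboarding rules.

    org_members:      list of dicts with hypothetical keys "login",
                      "email", and "two_factor".
    active_employees: set of lowercase corporate email addresses.
    Returns a dict mapping each flagged login to a list of issues.
    """
    findings = {}
    for member in org_members:
        issues = []
        email = (member.get("email") or "").lower()
        if not email.endswith("@" + corporate_domain):
            issues.append("non-corporate email")
        elif email not in active_employees:
            issues.append("not in employee inventory")  # possible ghost account
        if not member.get("two_factor"):
            issues.append("2FA disabled")
        if issues:
            findings[member["login"]] = issues
    return findings
```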

Employ Sufficient Authentication and Authorization Restrictions

Identities granted more permissions than needed for the repository open opportunities for privilege escalation and can result in unauthorized code changes, tampering with the build process, and access to sensitive data. Automation can help validate access controls, check user permissions, and identify potential vulnerabilities, enabling organizations to enact routine proactive measures, such as:

- Analyzing and mapping all identities across the engineering ecosystem. For each identity, continuously map the identity provider, permissions granted, and permissions used. Ensure that analysis covers all programmatic access methods.

- Removing unnecessary permissions for each identity across various systems in the environment.

- Establishing an acceptable period for disabling or removing stale accounts. Disable and remove identities that exceed this inactivity period.

- Mapping all external collaborators and aligning their identities with the principle of least privilege. When possible, grant permissions with an expiry date for human and programmatic accounts.

- Prohibiting employees from accessing SCMs, CIs, or other CI/CD platforms using their personal email addresses or addresses from domains not owned by the organization. Monitor non-domain addresses across different systems and remove non-compliant users.

- Disallowing users from self-registering to systems and granting permissions based on necessity.

- Not granting base permissions in a system to all users or to large groups to which user accounts are assigned automatically.

- Creating dedicated accounts for each specific context, rather than using shared accounts, and granting the exact set of permissions required for the given context.
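
Two of the measures above, removing unnecessary permissions and disabling stale accounts, reduce to simple comparisons once the identity mapping exists. The following sketch assumes each identity record carries the hypothetical keys `granted`, `used`, and `last_active`; how those values are collected depends on the systems in use.

```python
from datetime import datetime, timedelta, timezone


def review_identity(identity, max_inactive_days, now=None):
    """Return (unused_permissions, is_stale) for one identity record.

    identity: dict with hypothetical keys "granted" and "used" (sets of
              permission names) and "last_active" (timezone-aware datetime).
    """
    now = now or datetime.now(timezone.utc)
    # Permissions that were granted but never exercised are removal candidates.
    unused = set(identity["granted"]) - set(identity["used"])
    # An identity is stale once it exceeds the agreed inactivity period.
    is_stale = now - identity["last_active"] > timedelta(days=max_inactive_days)
    return unused, is_stale
```

Running this review for human and programmatic identities alike keeps service accounts and tokens from being overlooked.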

Implement Secure Storage

Establish a secure, tamper-proof repository to store artifacts. Enable versioning to maintain a historical record of artifact changes, and implement real-time monitoring to track and alert on suspicious activity. Configure the system so that, if an artifact is compromised, you can roll back to a previous, known-good version.
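
To make the versioning and rollback idea concrete, here is a small in-memory sketch. The class name and its methods are hypothetical, and an in-memory dictionary is not tamper-proof; a real deployment would rely on the registry's own immutability and versioning features. The point is only the shape of the workflow: every version is recorded by digest, and a rollback republishes a known-good version without rewriting history.

```python
import hashlib


class VersionedArtifactStore:
    """Append-only history of artifact versions (illustrative only)."""

    def __init__(self):
        self._history = {}  # name -> list of (digest, content), oldest first

    def put(self, name, content: bytes) -> str:
        """Store a new version and return its SHA-256 digest."""
        digest = hashlib.sha256(content).hexdigest()
        self._history.setdefault(name, []).append((digest, content))
        return digest

    def latest(self, name) -> bytes:
        return self._history[name][-1][1]

    def versions(self, name):
        return [digest for digest, _ in self._history[name]]

    def rollback(self, name, known_good_digest) -> bytes:
        """Republish an earlier version as the newest entry."""
        for digest, content in self._history[name]:
            if digest == known_good_digest:
                self._history[name].append((digest, content))
                return content
        raise KeyError(f"no version of {name!r} with digest {known_good_digest}")
```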

Conduct Integrity Validation Checks from Development to Production

Implement processes and technologies that validate resource integrity throughout the software delivery chain. As developers generate a resource, they should sign it using an external resource signing infrastructure. Before consuming a resource in subsequent pipeline stages, cross-check its integrity against the signing authority.

Code Signing

SCM solutions let each contributor sign commits with a unique key, so the pipeline can block unsigned commits from progressing.

Artifact Verification Software

Tools designed for signing and verifying code and artifacts, such as the Linux Foundation's Sigstore, can prevent unverified software from advancing down the pipeline.

Configuration Drift Detection

Implement measures to detect configuration drifts, such as resources in cloud environments not managed using a signed infrastructure-as-code template. Such drifts could indicate deployments from untrusted sources or processes.
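
At its core, drift detection is a comparison between what the infrastructure-as-code template declares and what is actually running. The sketch below assumes both sides have already been exported as dictionaries keyed by a resource identifier; the function name and the report keys are hypothetical.

```python
def detect_drift(declared, live):
    """Compare declared (IaC) resources with live resources.

    Both arguments map a resource ID to a dict of settings.
    Returns unmanaged resources (live only), missing resources
    (declared only), and per-setting differences as
    (declared_value, live_value) pairs.
    """
    unmanaged = sorted(set(live) - set(declared))
    missing = sorted(set(declared) - set(live))
    changed = {}
    for resource_id in set(declared) & set(live):
        want, have = declared[resource_id], live[resource_id]
        diff = {
            key: (want.get(key), have.get(key))
            for key in set(want) | set(have)
            if want.get(key) != have.get(key)
        }
        if diff:
            changed[resource_id] = diff
    return {"unmanaged": unmanaged, "missing": missing, "changed": changed}
```

Resources reported as `unmanaged` are the ones most likely to indicate a deployment that bypassed the signed template.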

Employ Cryptographic Signing

Use public key infrastructure (PKI) to cryptographically sign artifacts at each stage of the CI/CD pipeline, and validate each signature against a trusted certificate authority before the artifact is consumed. Configure your CI/CD pipeline to reject artifacts with invalid or missing signatures to reduce the risk of deploying tampered resources or unauthorized changes.
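
The gating logic, rejecting anything whose signature is missing or invalid, can be sketched independently of the signing technology. Note the substitution: the Python standard library has no asymmetric signing, so this example uses HMAC-SHA256 with a shared secret as a stand-in. That is not PKI. A real pipeline would sign with a private key and verify against a certificate chain, but the admit-or-reject decision has the same shape. The function names are hypothetical.

```python
import hashlib
import hmac


def sign_artifact(content: bytes, key: bytes) -> str:
    """Produce a hex signature (HMAC stand-in for a PKI signature)."""
    return hmac.new(key, content, hashlib.sha256).hexdigest()


def admit_artifact(content: bytes, signature, key: bytes) -> bool:
    """Admit the artifact only if a signature is present and valid."""
    if not signature:
        return False  # a missing signature is treated as a failure
    expected = sign_artifact(content, key)
    # compare_digest avoids leaking information through comparison timing
    return hmac.compare_digest(expected, signature)
```

Treating a missing signature as a failure, rather than skipping the check, is the design choice that matters: an attacker should not be able to bypass verification simply by stripping the signature.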

Use Only Secured Container Images

Container images can contain vulnerabilities that attackers can exploit to gain unauthorized access to the container and its host. To prevent this, use secure, trusted container images from reputable sources and scan them regularly. When deploying a container from a public registry, it's particularly important to first scan the image for malware and vulnerabilities.
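
The "trusted sources" part of this advice can be enforced mechanically before a deployment proceeds. The sketch below allows an image only if it comes from an allowlisted registry and is pinned by digest. The function names and the allowlist are hypothetical; the host detection follows the Docker image reference convention, in which the first path component is a registry host only if it contains a dot or a colon or is `localhost`, and `docker.io` is assumed otherwise. Requiring a digest is an added assumption of this sketch, not a requirement stated above.

```python
def registry_host(image_ref: str) -> str:
    """Extract the registry host from an image reference."""
    first, separator, _ = image_ref.partition("/")
    # Without a slash, or with a first component that does not look like
    # a host, the reference points at the default registry.
    if separator and ("." in first or ":" in first or first == "localhost"):
        return first
    return "docker.io"


def is_allowed_image(image_ref: str, trusted_registries) -> bool:
    """Allow only digest-pinned images from trusted registries."""
    pinned = "@sha256:" in image_ref
    return pinned and registry_host(image_ref) in trusted_registries
```

Pinning by digest means the image that was scanned is the image that is deployed, even if the tag is later moved.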

Enforce Multi-Source Validation

Adopt a multi-source validation strategy that verifies the integrity of artifacts using several independent mechanisms, such as checksums computed with secure hash algorithms, digital signatures, and trusted repositories. Keep the cryptographic algorithms and keys up to date to maintain their effectiveness.

Third-Party Resource Validation

Third-party resources incorporated into build and deploy pipelines, like scripts executed during the build process, should undergo rigorous validation. Before utilizing these resources, compute their hash and compare it against the official hash provided by the resource provider.
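
The hash comparison described above takes only a few lines. This sketch assumes the provider publishes a SHA-256 hash as a hexadecimal string; the function names are hypothetical, and the official hash should be obtained over a separate trusted channel rather than from the same location as the download.

```python
import hashlib
import hmac


def sha256_of_file(path, chunk_size=65536) -> str:
    """Hash a file in chunks so large downloads do not fill memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_official_hash(path, official_hex: str) -> bool:
    """Compare the computed hash with the provider's published value."""
    expected = official_hex.strip().lower()
    return hmac.compare_digest(sha256_of_file(path), expected)
```

A build step would call `matches_official_hash` on each downloaded script and stop the pipeline when it returns `False`.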

Integrate Security Scanning

The CI/CD pipeline should use only vetted (production-approved) code when creating images. Incorporate vulnerability scanning tools, along with software composition analysis (SCA) and static application security testing (SAST), into the CI/CD pipeline to ensure image integrity before pushing images to the registry from which production deployments will pull them. Follow image-building best practices as well. For instance, remove all unnecessary software components, libraries, configuration files, and secrets before building images.

Taking a conservative, cautious approach will allow teams to address vulnerabilities early in the development process and maintain a high level of code quality while reducing the risk of security incidents. Choose a container image scanning solution that can integrate with all registry types. Platforms like Prisma Cloud provide administrators with a flexible, one-stop image scanning solution.
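
Whatever scanner is used, the pipeline needs a rule that turns scan results into a push-or-block decision. The following sketch shows one such rule based on a severity threshold. The finding format (dicts with `id` and `severity` keys), the severity names, and the function names are assumptions for illustration and do not reflect the output format of any particular scanner.

```python
SEVERITY_ORDER = {"low": 1, "medium": 2, "high": 3, "critical": 4}


def blocking_findings(findings, fail_on="high"):
    """Return the findings at or above the fail_on severity."""
    threshold = SEVERITY_ORDER[fail_on]
    return [
        finding
        for finding in findings
        if SEVERITY_ORDER[finding["severity"].lower()] >= threshold
    ]


def may_push(findings, fail_on="high") -> bool:
    """Allow the push to the registry only when nothing blocks it."""
    return not blocking_findings(findings, fail_on)
```

Keeping the threshold as a parameter lets teams start with a lenient setting and tighten it as the backlog of existing findings shrinks.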

Image Analysis Sandbox

Using an image analysis sandbox will enhance your container security strategy during the development and deployment of containerized applications, allowing you to safely pull and run container images that possibly contain outdated, vulnerable packages and embedded malware from external repositories.

The sandbox lets you observe suspicious or anomalous container behavior, such as cryptomining, port scanning, modified binaries, and kernel module modifications, in a controlled environment. You can expose risks and identify suspicious dependencies buried in your software supply chain that static analysis would otherwise have missed. With an image analysis sandbox, you can:

- Capture a detailed runtime profile of the container

- Assess the risk of an image

- Incorporate dynamic analysis in your workflow

Establish Validation Policies and Audit Schedule

To ensure proper image and artifact integrity validation, organizations should establish clear policies that define validation processes. Once established, regularly audit compliance with internal policies to identify and address weaknesses, as well as areas of noncompliance. Continuous monitoring and analysis will help detect anomalies and unauthorized activities.

At-a-Glance Container Registry Security Checklist

- Use trusted registries and libraries

- Secure hosting server and implement robust access policies

- Implement sufficient authentication and authorization restrictions

- Establish secure storage for artifacts

- Perform integrity validation checks throughout CI/CD

- Employ cryptographic signing

- Enforce multi-source validation

- Validate third-party resources

- Integrate security scanning in the CI/CD pipeline

- Establish validation policies and regular audit schedules

Container Registry FAQs

What is a CI/CD pipeline?

A CI/CD pipeline automates the steps involved in getting software from version control into the hands of end users. It encompasses continuous integration (CI) and continuous deployment (CD), automating the process of software delivery and infrastructure changes. The pipeline typically includes stages like code compilation, unit testing, integration testing, and deployment. This automation ensures that software is always in a deployable state, facilitating rapid and reliable software release cycles. CI/CD pipelines are essential for DevOps practices, enabling teams to deliver code changes more frequently and reliably.

How does source control management (SCM) relate to container registries?

- SCM helps maintain the consistency and traceability of code used to build container images, allowing developers to easily identify the specific code version used to create a container image.

- SCM enables developers to collaborate on code, ensuring that the container images built and stored in the registry meet the organization's quality requirements.

- SCM tools enhance workflow by integrating with CI/CD pipelines and automating the process of building, testing, and pushing container images to the registry.

What is a webhook?

A webhook is a method for augmenting or altering the behavior of a webpage or web application with custom callbacks. These callbacks may be maintained, modified, and managed by third-party users and developers who don't necessarily have access to the source code of the webpage or application. In cloud computing and DevOps, webhooks are used to trigger automated workflows, such as CI/CD pipelines, when specific events occur in a repository or deployment environment. Webhooks enable real-time notifications and automatic reactions to events, enhancing automation and integration between cloud services and tools.

What is Notary?

Notary is an open-source tool that provides a framework for publishing and verifying the signatures of content, such as container images. It implements The Update Framework (TUF) specification for secure content delivery and updates. Notary ensures that the content a user receives is exactly what the publisher intended, safeguarding against unauthorized modifications.

Notary is commonly used in conjunction with Docker Content Trust to sign and verify Docker images.