What Are Large Language Models (LLMs)?

Large language models (LLMs) are a cutting-edge natural language processing (NLP) development designed to understand and generate human language. LLMs are advanced AI models trained on vast amounts of text data, enabling them to recognize linguistic patterns, comprehend context, and produce coherent and contextually relevant responses. While NLP provides the foundational techniques for machines to grapple with language, LLMs represent a specialized approach that has significantly enhanced the machine’s capability to mimic human-like language understanding and generation..

What Are Some Current LLMs?

LLMs represent the frontier of natural language processing, and several models currently dominate the space, including Google’s Gemini, Meta’s Galactica and Llama, OpenAI’s GPT series, and others like Falcon 40B and Phi-1. With varying architectures and parameter sizes, these models excel at tasks ranging from answering queries to generating coherent and contextually relevant text over long passages.

BERT, introduced by Google, laid the foundational groundwork with its transformer-based architecture. On the other hand, Meta’s Galactica, a recent entrant, explicitly targets the scientific community while facing scrutiny for producing misleading “hallucinations” that could have profound implications in the scientific domain. Meanwhile, OpenAI’s GPT series, especially GPT-3 and GPT-4, have been groundbreaking in their capacity, with the latter rumored to contain over 170 trillion parameters and abilities to process both text and images. This model’s prowess led to speculations about nearing artificial general intelligence (AGI), a theoretical machine capability on par with or exceeding human intelligence.

Challenges, however, persist. The sheer scale and complexity of these models can lead to unpredictable outputs, and their immense training requirements raise concerns about environmental sustainability and biased results.

Amidst concerns, though, the evolution of LLMs promises advancements in diverse sectors — from mundane tasks like web search improvements to critical areas like medical research and cybersecurity. As the field advances, the balance between potential and caution remains paramount.

How LLMs Work

To excel in understanding and generating human-like language, LLMs use a combination of neural networks, vast training datasets, and an architecture called transformers.

Neural Networks

At the core of large language models are neural networks with multiple layers, known as deep learning models. These networks consist of interconnected nodes, or neurons, that learn to recognize patterns in the input data during the training phase. LLMs are trained on a massive body of text, encompassing diverse sources such as websites, books, and articles, allowing them to learn grammar, syntax, semantics, and contextual information.

On the backs of algorithms designed to recognize patterns, neural networks interpret sensory data through a kind of machine perception, labeling, or clustering of raw input. The architectures of neural networks range from simple feedforward networks, where connections between the nodes don’t form a cycle, to complex structures with sophisticated layers and multiple feedback loops.

Convolutional Neural Networks (CNNs): These are particularly effective for processing data with a grid-like topology. Examples include image data, which can be thought of as a 2D grid of pixels.

Recurrent Neural Networks (RNNs): These are suited for sequential data like text and speech. The output at each step depends on the previous computations and a certain kind of memory about what has been processed so far.

Transformers

The transformer architecture is a critical component of LLMs, introduced by Vaswani et al. in 2017. Transformers address the limitations of earlier sequence models like RNNs and LSTMs, which struggled with long-range dependencies and parallelization. Transformers employ a mechanism called self-attention, which enables the model to weigh the importance of different words in the input context and capture relationships between them, regardless of their distance in the sequence.

Tokenization

Tokenization is the first step in processing text with an LLM. The input text is broken down into smaller units called tokens, which are then converted into numerical representations (vectors) that the neural network can process. During training, the model learns to generate contextually appropriate output tokens based on the input tokens and their relationships.

The training process involves adjusting the weights of the neural network connections through a technique called backpropagation. By minimizing the difference between the model's predictions and the actual target tokens in the training data, the model learns to generate more accurate and coherent language.

Once trained, large language models can be fine-tuned on specific tasks or domains, such as sentiment analysis, summarization, or question-answering, by training the model for a short period on a smaller, task-specific dataset. This process enables the LLM to adapt its generalized language understanding to the nuances and requirements of the target task.

Related Article: Artificial Intelligence Explained

Benefits of LLMs

Large language models offer a wide range of benefits, including:

- Advanced Natural Language Understanding: LLMs can understand context and nuances in language, making their responses more relevant and human-like.

- Versatility: LLMs can be applied to various tasks, such as text generation, summarization, translation, and question answering, without requiring task-specific training.

- Translation: LLMs trained in multiple languages can effectively translate between them. Some theorize that they could even derive meanings from unknown or lost languages based on patterns.

- Automating Mundane Tasks: LLMs can perform text-related tasks like summarizing, rephrasing, and generating content, which can be especially useful for businesses and content creators.

- Emergent Abilities: Due to the vast amount of data they’re trained on, LLMs can exhibit unexpected but impressive capabilities, such as multi-step arithmetic, answering complex questions, and generating chain-of-thought prompts.

- Debugging and Coding: In cybersecurity, LLMs can assist in writing and debugging code more rapidly than traditional methods.

- Analysis of Threat Patterns: In cybersecurity, LLMs can identify patterns related to Advanced Persistent Threats, aiding incident attribution and real-time mitigation.

- Response Automation: In Security Operations Centers, LLMs can automate responses, generate scripts and tools, and assist in report writing, reducing the time security professionals spend on routine tasks.

Despite these benefits, it’s essential to remember that LLMs have drawbacks and ethical considerations that must be managed.

Challenges with LLMs

While it is easy to get caught up in the benefits delivered by an LLM’s impressive linguistic capabilities, organizations must also be aware and prepared to address the potential challenges that come with them.

Operational Challenges

- Hallucination: LLMs can sometimes produce bizarre, untrue outputs or give an impression of sentience. These outputs aren’t based on the model’s training data and are termed “hallucinations.”

- Bias: If an LLM is trained on biased data, its outputs can be discriminatory or biased against certain groups, races, or classes. Even post-training, biases can evolve based on user interactions. Microsoft’s Tay is a notorious example of how bias can manifest and escalate.

- Glitch Tokens or Adversarial Examples: These are specific inputs crafted to make the model produce erroneous or misleading outputs, effectively causing the model to “malfunction.”

- Lack of Explainability: It can be challenging to understand how LLMs make certain decisions or generate specific outputs, making it hard to troubleshoot or refine them.

- Over-Reliance: As LLMs become more integrated into various sectors, there’s a risk of excessive reliance, potentially sidelining human expertise and intuition.

LLM Use Cases & Deployment Options

LLMs offer organizations a number of optional implementation patterns, each relying on a different set of tools and related security implications.

Use of Pre-Trained LLMs

Cloud providers like OpenAI and Anthropic offer API access to powerful LLMs that they manage and secure. Organizations can leverage these APIs to incorporate LLM capabilities into their applications without having to manage the underlying infrastructure.

Alternatively, open-source LLMs such as Meta’s LLaMa can be run on an organization's own infrastructure, providing more control and customization options. On the downside, open-source LLMs require significant compute resources and AI expertise to implement and maintain securely.

LLMs Deployment Models

- API-based SaaS: The infrastructure is provided and managed by the LLM developer (e.g., OpenAI) and provisioned via a public API.

- CSP-managed: The LLM is deployed on infrastructure provided by cloud hyperscalers and can run in a private or public cloud, such as Azure, OpenAI, and Amazon Bedrock.

- Self-managed: The LLM is deployed on the company’s own infrastructure, which is relevant only for open-source or homegrown models.

Pre-trained LLMs offer various functionalities — content generation, chatbots, sentiment analysis, language translation, and code assistants. An e-commerce company might use an LLM to generate product descriptions, while a software development firm could leverage an LLM-powered coding assistant to boost programmer productivity.

Security Implications Associated with Pre-Trained LLMs

The availability of easily accessible cloud APIs and open-source models has dramatically lowered the barriers to adding advanced AI language capabilities to applications. Developers can now plug LLMs into their software without maintaining deep expertise in AI and ML. While this accelerates innovation, it increases the risk of shadow AI projects that lack proper security and compliance oversight. Development teams, meanwhile, may be experimenting with LLMs without fully considering data privacy, model governance, and output control issues.

Fine-Tuning and Retrieval-Augmented Generation (RAG)

To customize LLMs for specific applications, organizations can fine-tune them on smaller datasets related to the desired task or implement RAG, which involves integrating LLMs with knowledge bases for question-answering and content summarization.

Use cases for these include specialized AI assistants with access to internal data (e.g., for customer support, HR, or IT helpdesk) and Q&A apps (e.g., for documentation, code repositories, or training materials). For example, a telecommunication company's customer service chatbot could be fine-tuned on product documentation, FAQs, or past support interactions to better assist customers with technical issues and account management.

Security Implications Associated with Fine-tuning and RAG

Fine-tuning and RAG allow organizations to adapt LLMs to their specific domain and data, enabling more targeted and accurate outputs. However, this customization process often involves exposing the model to sensitive internal information during training. Strong data governance practices are required to ensure that only authorized data is used for fine-tuning and that the resulting models are secured.

Model Training

Some large technology companies and research institutions choose to invest in training their own LLMs. While this is a highly resource-intensive process that requires massive compute power and datasets, it gives organizations full control over the model architecture, training data, and optimization process. Additionally, the organization maintains full intellectual property rights over the resulting models.

Model training can result in advanced applications like drug discovery, materials science, or autonomous systems. A healthcare organization could develop a model to help diagnose diseases from medical records and imaging data, for example.

Security Implications Associated with Model Training

Training custom LLMs raises difficult questions regarding how to maintain accountability and auditability of model behavior when dealing with complex black-box models. The training process itself consumes enormous compute resources, necessitating strong isolation and access controls around the training environment to prevent abuse or interference. First, the organization must build a high-performance computing infrastructure and carefully curate massive datasets, which can introduce new security challenges.

LLM Security Concerns

A primary concern with deploying large language models in enterprise settings is potentially including sensitive data during training. Once data has been incorporated into these models, it becomes challenging to discern precisely what information was fed into them. This lack of visibility can be problematic when considering the myriad data sources used for training and the various individuals that could access this data.

Ensuring visibility into the data sources and maintaining strict control over who has access to them is crucial to prevent unintentional exposure of confidential information.

An additional concern is the potential misuse of LLMs in cyberattacks. Malicious actors can utilize LLMs to craft persuasive phishing emails to deceive individuals and gain unauthorized access to sensitive data. This method, known as social engineering, has the potential to create compelling and deceptive content, escalating the challenges of data protection.

Without rigorous access controls and safeguards, the risk of significant data breaches increases, with malicious actors gaining the ability to spread misinformation, propaganda, or other harmful content with ease.

While LLMs have near-infinite positive applications, they harbor the potential to create malicious code, bypassing conventional filters to prevent such behaviors. This susceptibility could lead to a new era of cyberthreats where data leaks aren’t just about stealing information but generating dangerous content and codes.

If manipulated, for instance, LLMs can produce malicious software, scripts, or tools that can jeopardize entire systems. Their potential for “reward hacking” raises alarms in the cybersecurity domain, suggesting unintended methods to fulfill their objectives could be discovered, leading to accidental access to or harvesting of sensitive data.

As we rely more on LLM applications, it becomes imperative for organizations and individuals to stay vigilant to these emerging threats, prepared to protect data at all times.

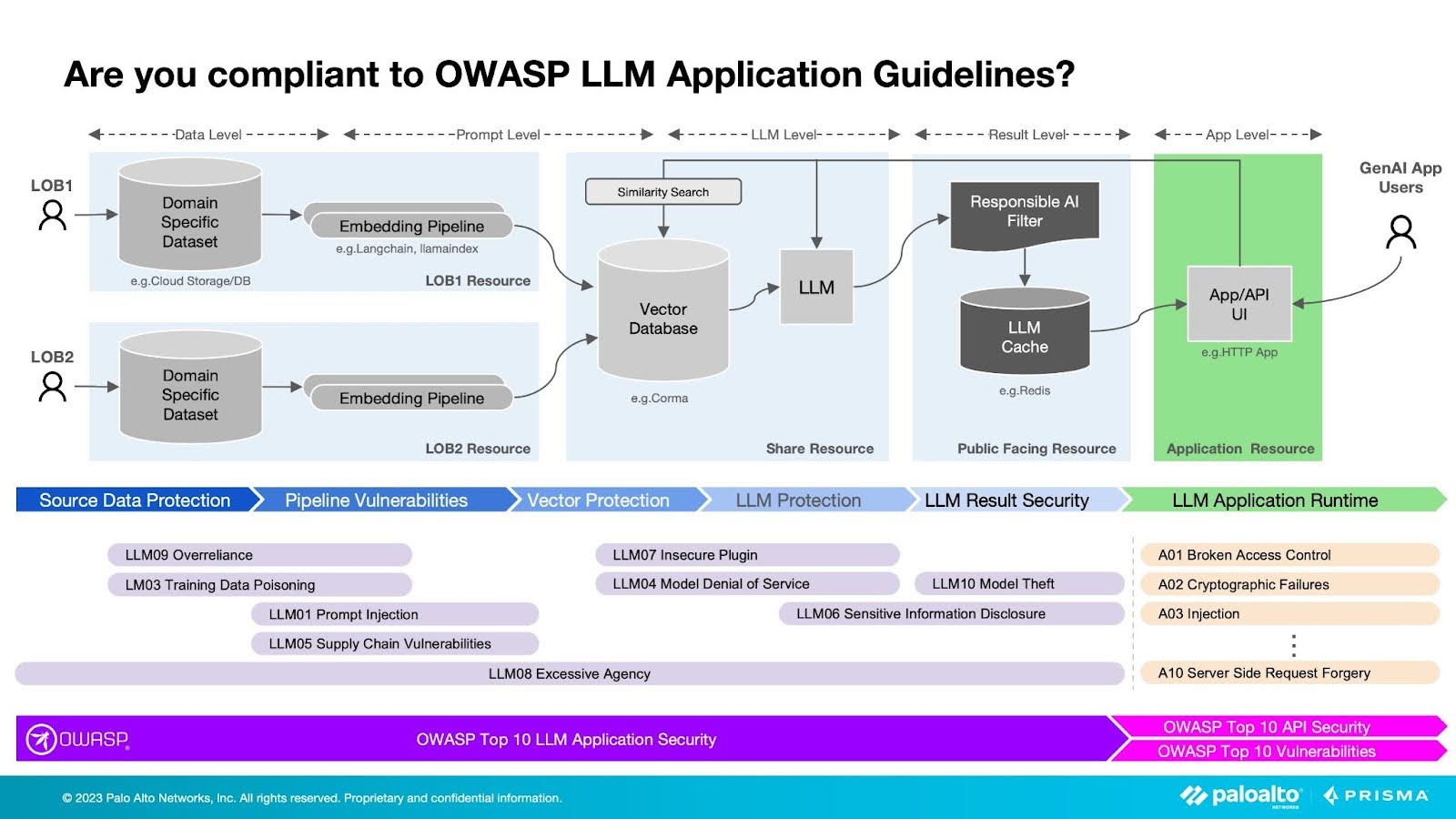

Figure 1: Securing LLMs from top OWASP security risks

The OWASP Top Ten: LLM Security Risks

Conventional application vulnerabilities present a new strain of security risks within LLMs. But true to form, OWASP delivered the OWASP Top Ten LLM Security Risks in timely fashion, alerting developers to new mechanisms and the need to adapt traditional remediation strategies for their applications utilizing LLMs.

LLM01: Prompt Injection

Prompt injection can manipulate a large language model through devious inputs, causing the LLM to execute the attacker's intentions. With direct injections, the bad actor overwrites system prompts. With indirect prompt injections, attackers manipulate inputs from external sources. Either method can result in data exfiltration, social engineering, and other issues.

LLM02: Insecure Output Handling

Insecure output handling is a vulnerability that occurs when an LLM output is accepted without scrutiny, exposing backend systems. It arises when a downstream component blindly accepts LLM output without effective scrutiny. Misuse can lead to cross-site scripting (XSS) and cross-site request forgery (CSRF) in web browsers, as well as server-side request forgery (SSRF), privilege escalation, and remote code execution on backend systems.

LLM03: Training Data Poisoning

Training data poisoning occurs when LLM training data is manipulated via Common Crawl, WebText, OpenWebText, books, and other sources. The manipulation introduces backdoors, vulnerabilities, or biases that compromise the LLM’s security and result in performance decline, downstream software exploitation, and reputational damage.

LLM04: Model Denial of Service

Model denial of service occurs when an attacker exploits a LLM to trigger a resource-intensive operation, leading to service degradation and increased costs. This vulnerability is amplified by the demanding nature of LLMs and the unpredictable nature of user inputs. In a model denial of service scenario, an attacker engages with an LLM in a manner that demands a disproportionate amount of resources, causing a decline in service quality for both the attacker and other users while potentially generating significant resource expenses.

LLM05: Supply Chain Vulnerabilities

Supply chain vulnerabilities in LLMs can compromise training data, ML models, and deployment platforms, causing security breaches or total system failures. Vulnerable components or services can arise from poisoned training data, insecure plugins, outdated software, or susceptible pretrained models.

LLM06: Sensitive Information Disclosure

LLM applications can expose sensitive data, confidential information, and proprietary algorithms, leading to unauthorized access, intellectual property theft, and data breaches. To mitigate these risks, LLM applications should employ data sanitization, implement appropriate strict user policies, and restrict the types of data returned by the LLM.

LLM07: Insecure Plugin Design

Plugins can comprise insecure inputs and insufficient access control, making them prone to malicious requests that can lead to data exfiltration, remote code execution, and privilege escalation. Developers must follow stringent parameterized inputs and secure access control guidelines to prevent exploitation.

LLM08: Excessive Agency

Excessive agency refers to LLM-based systems taking actions leading to unintentional consequences. The vulnerability stems from granting the LLM too much autonomy, over-functionality, or excessive permissions. Developers should limit plugin functionality to what is absolutely essential. They should also track user authorization, require human approval for all actions, and implement authorization in downstream systems.

LLM09: Overreliance

An LLM can generate inappropriate content when human users or systems excessively rely on the LLM without providing proper oversight. Potential consequences of LLM09 include misinformation, security vulnerabilities, and legal issues.

LLM10: Model Theft

LLM model theft involves unauthorized access, copying, or exfiltration of proprietary LLMs. Model theft results in financial loss and loss of competitive advantage, as well as reputation damage and unauthorized access to sensitive data. Organizations must enforce strict security measures to protect their proprietary LLMs.

Large Language Model FAQs

NLP is a subfield of AI and linguistics that focuses on enabling computers to understand, interpret, and generate human language. NLP encompasses a wide range of tasks, including sentiment analysis, machine translation, text summarization, and named entity recognition. NLP techniques typically involve computational algorithms, statistical modeling, and machine learning to process and analyze textual data.

A LLM is a type of deep learning model, specifically a neural network, designed to handle NLP tasks at a large scale. LLMs, such as GPT-3 and BERT, are trained on vast amounts of text data to learn complex language patterns, grammar, and semantics. These models leverage a technique called transformer architecture, enabling them to capture long-range dependencies and contextual information in language.

The primary difference between NLP and LLM is that NLP is a broader field encompassing various techniques and approaches for processing human language, while LLM is a specific type of neural network model designed for advanced NLP tasks. LLMs represent a state-of-the-art approach within the NLP domain, offering improved performance and capabilities in understanding and generating human-like language compared to traditional NLP methods.

Generative adversarial networks (GANs) are a class of machine learning models designed to generate new data samples that resemble a given dataset. GANs consist of two neural networks, a generator and a discriminator, that are trained simultaneously in a competitive manner. The generator creates synthetic samples, while the discriminator evaluates the generated samples and distinguishes them from real data.

The generator continually improves its data generation capabilities by attempting to deceive the discriminator, which, in turn, refines its ability to identify real versus generated samples. This adversarial process continues until the generated samples become nearly indistinguishable from the real data, making GANs particularly useful in applications like image synthesis, data augmentation, and style transfer.

Variational autoencoders (VAEs) are a type of generative model that learn to represent complex data distributions by encoding input data into a lower-dimensional latent space and then reconstructing the data from this compressed representation. VAEs consist of two neural networks: an encoder that maps the input data to a probability distribution in the latent space, and a decoder that reconstructs the data from sampled points in this distribution.

The VAE model is trained to minimize the reconstruction error and a regularization term that encourages the learned distribution to align with a predefined prior distribution. VAEs are capable of generating new data samples by decoding random points sampled from the latent space, making them suitable for applications such as image generation, data denoising, and representation learning.